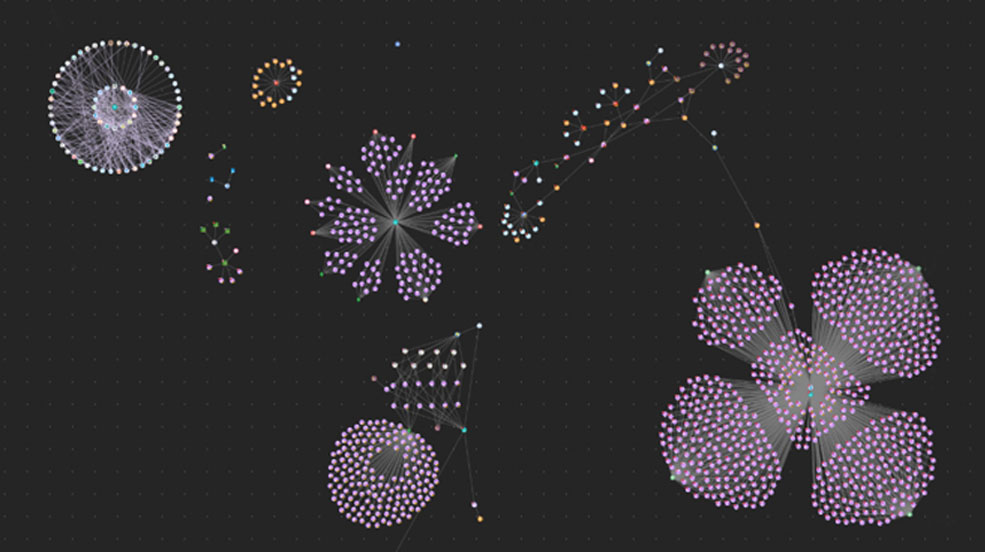

Pathfinder

Building an enterprise data map.

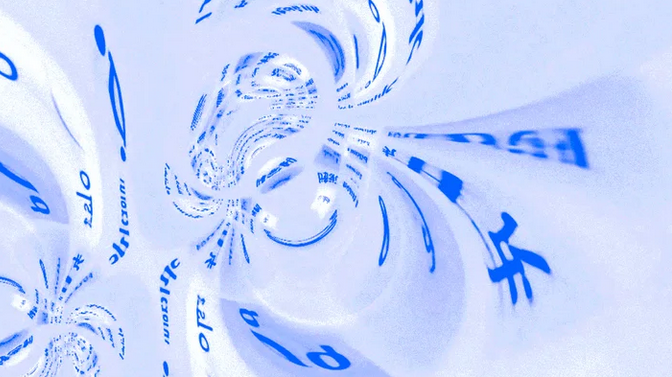

Deep Search

Creating searchable knowledge graphs from large document collections.

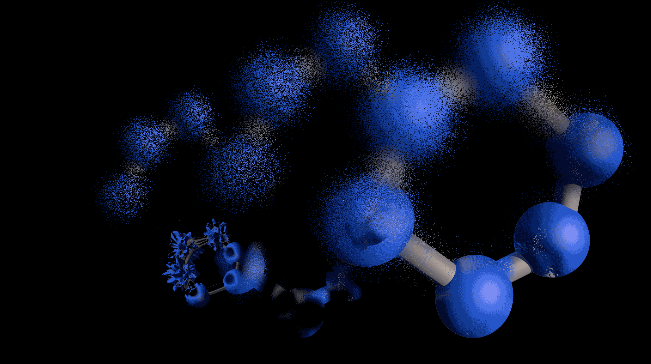

AI for Scientific Discovery

Creating the AI-enabled lab for a new era of experimentation.

Lab that Learns

Leveraging foundation models and multi-cloud computing for scientific discovery.

FlowPilot

Creating an LLM-powered system for enterprise data.

flex.data

Towards a composable, hybrid-cloud data platform.

Join our team

We are currently looking for highly motivated and enthusiastic software engineers and researchers.

Contact

Abdel Labbi

Department head